Compute a two-level decision tree using the greedy approach described in this chapter. Use the classification error rate as the criterion for splitting. What is the overall error rate of the induced tree?

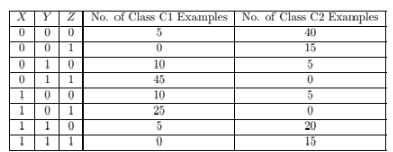

Consider the following set of training examples.

Splitting Attribute at Level 1.

To determine the test condition at the root node, we need to compute the error rates for attributes X, Y, and Z. For attribute X, the corresponding counts are:

Therefore, the error rate using attribute X is (60 + 40)/200 = 0.5.

For attribute Y, the corresponding counts are:

Therefore, the error rate using attribute Y is (40 + 40)/200 = 0.4.

For attribute Z, the corresponding counts are:

Therefore, the error rate using attribute Z is (30 + 30)/200 = 0.3.

Since Z gives the lowest error rate, it is chosen as the splitting attribute at level 1.
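The level-1 comparison can be checked with a few lines of Python. This is only a minimal sketch: the names `misclassified`, `N`, and `error_rates` are illustrative, and the per-branch misclassified counts are taken directly from the numerators in the error-rate expressions for the three attributes, with 200 training examples in total.

```python
# Total number of training examples (the denominator used for every attribute).
N = 200

# Misclassified examples in each of the two branches of a candidate split,
# read off the numerators of the error-rate expressions for X, Y, and Z.
misclassified = {
    "X": (60, 40),
    "Y": (40, 40),
    "Z": (30, 30),
}

# Classification error rate of a split = misclassified examples / all examples.
error_rates = {attr: sum(counts) / N for attr, counts in misclassified.items()}

# The greedy approach picks the attribute with the lowest error rate.
best = min(error_rates, key=error_rates.get)

for attr, rate in error_rates.items():
    print(f"{attr}: {rate:.2f}")
print("chosen splitting attribute:", best)
```

The same pattern would apply at level 2, using the counts within each child node of the chosen split.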

Computer Science & Information Technology

You might also like to view...

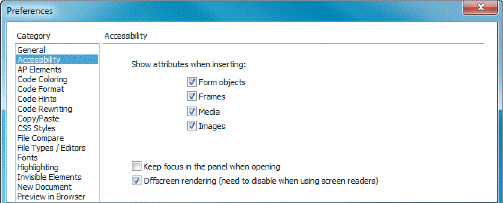

The accessibility attributes for page design, as shown in the accompanying figure, are important because on May 5, 1999, the Web Content Accessibility Guidelines were published by ____.

The accessibility attributes for page design, as shown in the accompanying figure, are important because on May 5, 1999, the Web Content Accessibility Guidelines were published by ____.

A. DARPA B. NSA C. the NSF D. the W3C

Match the following terms to another common name:

I. laptop computer II. personal computer III. smartphone IV. dumb terminal V. CPU A. cellular telephone B. microprocessor C. microcomputer D. notebook computer E. point-of-sale terminal

Match the forensic tool with its description

I. Paraben software A. Considered to be the gold standard II. FTK B. Highly regarded and able to generate detailed reports III. Logicube C. Considered most reliable for hardware IV. Guidance software D. Has Faraday bags

Which type of cable would you use if you were concerned about EMI?

a. Plenum-rated b. UTP c. STP d. Coaxial